The ITI-CERTH

The Information Technologies Institute (ITI) was founded in 1998 as a non-profit organisation under the auspices of the General Secretariat for Research and Technology of the Greek Ministry of Development, with its head office in Thessaloniki, Greece. Since 10/03/2000 it has been a founding member of the Centre for Research and Technology Hellas (CERTH).

CERTH/ITI is one of the leading institutions in Greece in the fields of informatics, telematics and telecommunications, with long experience in numerous European and national R&D projects. It is active in a wide range of application sectors (energy, buildings and construction, health, manufacturing, robotics, (cyber)security, transport, smart cities, space, agri-food, marine and blue growth, water, etc.) and technology areas such as data and visual analytics, data mining, machine and deep learning, virtual and augmented reality, image processing, computer and cognitive vision, human-computer interaction, IoT and communication technologies, navigation technologies, cloud and computing technologies, distributed ledger technologies (blockchain), (semantic) interoperability, system integration, mobile and web applications, hardware design and development, smart grid technologies and solutions, and social media analysis.

CERTH/ITI has participated in more than 380 research projects funded by the European Commission (FP5, FP6, FP7 and H2020, including more than 115 H2020 projects) and in more than 100 research projects funded by Greek national research programmes and consulting subcontracts with the private sector (industry). ITI currently has 116 ongoing projects (including EU and national projects, contracts and services) with a total budget of €41,938k.

Over the last 10 years, ITI's publication record includes more than 330 scientific publications in international journals, more than 780 conference publications and 100 books and book chapters. These works have been cited more than 7,500 times.

CERTH/ITI has a staff of 393 people (including 9 researchers, 284 research assistants, 80 Postdoctoral Research Fellows, 10 Collaborative Faculty Members, 13 Administrative and Technical Employees).

Role within the TeamAware project

CERTH/ITI primarily contributes to WP6 (“Acoustic Detection System”) by developing algorithms, based on an acoustic vector sensor, for the detection and localisation of emergency events such as explosions, gunshots and snipers, as well as human voices (e.g., screams for help) and whistling during operations. In particular, CERTH/ITI leads T6.2 (“Overlapping acoustic event detection”), which aims at recognising overlapping acoustic events. It also participates in T6.1 (“Acoustic event analysis and detection”), which focuses primarily on the design of the ADS architecture and the implementation of algorithms for single-event detection (SED), and in T6.3 (“System validation”), which evaluates the final system in the test environment.

CERTH/ITI also participates in WP2 (“System Architecture Specification and Design”), which provides the requirements and architectural design for the ADS; this forms the basis for developing each component and algorithm in WP6. In turn, WP6 will feed the platform software in WP10 (“TeamAware AI Platform Software”), where fusion with the other modalities (T10.1) will help increase confidence in the detected events, as well as the user interface in WP11 (“TeamAware AR/Mobile Interfaces”). WP6 will also interface with the communication and network infrastructure of WP9 (“Secure and Standardised Communication Network”, T9.4). Finally, WP6 will take part in integration and testing in WP12 (“Integration and Test”, T12.1) and in the demonstration in WP13 (“Demonstration and Validation”, T13.4).

Acoustic Detection System

The objective of WP6 in the TeamAware project is to develop a system that can detect and localise emergency events for search and rescue purposes, including explosions, gunshots, human voices such as screams for help, and whistling. To this end, an Acoustic Vector Sensor (AVS) array will be mounted on an Unmanned Aerial Vehicle (UAV) together with a Raspberry Pi 4B that runs the necessary algorithms. In this context, the first responders' needs and requirements for the Acoustic Detection System (ADS) have been defined, the role of the ADS in the demonstration scenarios has been outlined, and the communication protocols with the TeamAware platform have been established. In addition, a literature review of audio-based surveillance systems similar to the one being developed has been conducted to gain insight into best practices and potential improvements.

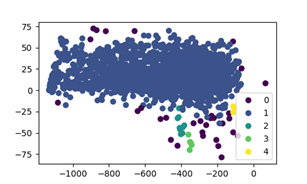

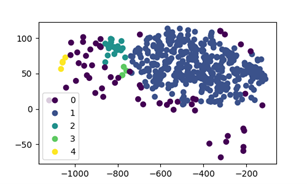

To address the objectives of the ADS, public datasets containing recordings of the required target classes were collected from AudioSet, FSD50K and MIVIA. Because the AudioSet and FSD50K data are weakly labelled, a preprocessing step was applied to remove outliers from them: unsupervised anomaly detection methods, such as K-Means and DBSCAN, were applied to the MFCC features of the recordings to produce a cleaner and more accurate training set. Figure 1 illustrates this clustering, showing the distribution of MFCC features for the Male Speech (a) and Traffic Noise (b) target classes. In both cases, only the recordings belonging to cluster 1 were retained for training.
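The outlier-removal step can be sketched as follows. This is a minimal illustration, not the ADS code: it assumes each recording has already been summarised as one vector of mean MFCCs (MFCC extraction is omitted), uses a plain NumPy K-Means with a deterministic farthest-first initialisation, and keeps the most populated cluster, whereas the source selected the cluster to retain (cluster 1) for each class. The helper names `kmeans` and `keep_main_cluster` are hypothetical. DBSCAN would instead flag low-density clips directly as noise (scikit-learn's `DBSCAN` labels them `-1`).

```python
import numpy as np


def kmeans(x: np.ndarray, k: int, n_iter: int = 100) -> np.ndarray:
    """Lloyd's K-Means with farthest-first initialisation; returns one label per row."""
    # Deterministic init: the sample nearest the global mean, then repeatedly
    # the sample farthest from every centroid chosen so far.
    centroids = [x[np.linalg.norm(x - x.mean(axis=0), axis=1).argmin()]]
    for _ in range(1, k):
        nearest = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[nearest.argmax()])
    centroids = np.array(centroids)

    labels = np.zeros(len(x), dtype=int)
    for _ in range(n_iter):
        # Assignment step: each sample joins its nearest centroid.
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: centroid = cluster mean (an empty cluster keeps its old centroid).
        updated = np.array([x[labels == j].mean(axis=0) if np.any(labels == j)
                            else centroids[j] for j in range(k)])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels


def keep_main_cluster(mfcc_means: np.ndarray, k: int = 2) -> np.ndarray:
    """Indices of the clips in the most populated cluster (hypothetical selection rule)."""
    std = mfcc_means.std(axis=0)
    z = (mfcc_means - mfcc_means.mean(axis=0)) / np.where(std > 0, std, 1.0)
    labels = kmeans(z, k)
    main = np.bincount(labels, minlength=k).argmax()
    return np.flatnonzero(labels == main)


# Usage with synthetic features: 40 consistent clips plus 5 off-class clips.
rng = np.random.default_rng(1)
clean = rng.normal(0.0, 1.0, size=(40, 13))  # 40 clips x 13 mean MFCCs
odd = rng.normal(8.0, 1.0, size=(5, 13))     # 5 mislabelled clips
kept = keep_main_cluster(np.vstack([clean, odd]))
print(len(kept))  # 40
```

Standardising the features first keeps MFCC coefficients with large numeric ranges from dominating the Euclidean distances.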

One of the challenges in this task is the detection of overlapping sound events, e.g., a scream overlapping with a police siren. Therefore, deep learning algorithms were implemented for both single and overlapping sound event detection.
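Overlapping events change the output formulation: a single-label classifier picks one class with an argmax, whereas overlapping detection needs an independent score per class. The sketch below illustrates that difference with per-class sigmoid scores and a fixed threshold. The class list is taken from the single-event classes listed for the ADS; the 0.5 threshold, the logit values and the function names are illustrative assumptions, not the ADS's actual decision rule.

```python
import numpy as np

# Single-event classes listed for the ADS (indices 0 to 11).
CLASSES = ["Aircraft", "Explosion", "Female Speech", "Gunshot", "Male Speech",
           "Background Noise", "Screaming", "Police siren", "Thunder",
           "Traffic Noise", "UAV Noise", "Vehicle Horn"]


def decode_single(logits: np.ndarray) -> str:
    """Single-label decoding: exactly one class, the highest-scoring one."""
    return CLASSES[int(np.argmax(logits))]


def decode_multi(logits: np.ndarray, threshold: float = 0.5) -> list[str]:
    """Multi-label decoding: independent sigmoid per class, every class above threshold."""
    probs = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=float)))
    return [name for name, p in zip(CLASSES, probs) if p >= threshold]


# Usage: illustrative logits for a scream overlapping a police siren.
logits = np.full(len(CLASSES), -4.0)
logits[6], logits[7] = 2.0, 1.5
print(decode_single(logits))  # Screaming
print(decode_multi(logits))   # ['Screaming', 'Police siren']
```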

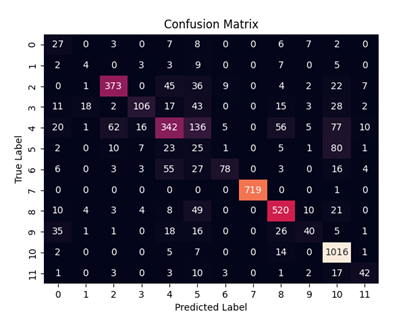

For the single-event detection algorithm, Short-Time Fourier Transform (STFT) magnitude spectrograms were extracted and converted into 128 × 257-pixel grayscale images. Mel-spectrogram features were also extracted and tested with several deep learning architectures, including DenseNet-121, MobileNetV2 and a custom CNN model. The models were optimised to be lightweight for deployment on the Raspberry Pi 4B. Their loading and inference times were measured and their F1-scores compared in order to select the most appropriate prediction model. The custom CNN model was integrated to run in real time on the Raspberry Pi, outputting a prediction every second. On unseen data, the model achieved a precision of 0.6036, a recall of 0.5309 and an F1-score of 0.5464. The confusion matrix obtained on unseen data is shown in Figure 2. The target classes 0 to 11 correspond to Aircraft, Explosion, Female Speech, Gunshot, Male Speech, Background Noise, Screaming, Police siren, Thunder, Traffic Noise, UAV Noise and Vehicle Horn, respectively.
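The conversion of audio into fixed-size STFT images can be sketched in NumPy as below. The 257 frequency bins are what a 512-point FFT yields (512 / 2 + 1), so `N_FFT = 512` is inferred from the stated image size; the Hann window, 256-sample hop, 16 kHz sample rate, 80 dB dynamic range and the helper names are assumptions made for illustration.

```python
import numpy as np

SR = 16_000     # assumed sample rate (Hz)
N_FFT = 512     # 512 // 2 + 1 = 257 frequency bins, matching the image width
HOP = 256       # assumed hop length in samples
N_FRAMES = 128  # time frames kept per image


def stft_magnitude(signal: np.ndarray) -> np.ndarray:
    """Magnitude STFT with a Hann window; expects len(signal) >= N_FFT."""
    window = np.hanning(N_FFT)
    n_frames = 1 + (len(signal) - N_FFT) // HOP
    frames = np.stack([signal[i * HOP:i * HOP + N_FFT] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # shape (n_frames, 257)


def to_grayscale_image(signal: np.ndarray) -> np.ndarray:
    """Log-magnitude STFT cropped/padded to 128 x 257 and scaled to uint8."""
    mag = stft_magnitude(signal)
    if mag.shape[0] < N_FRAMES:  # zero-pad short clips in time
        mag = np.pad(mag, ((0, N_FRAMES - mag.shape[0]), (0, 0)))
    mag = mag[:N_FRAMES]
    db = 20.0 * np.log10(mag + 1e-10)
    db = np.maximum(db, db.max() - 80.0)  # keep an 80 dB dynamic range
    scaled = (db - db.min()) / (db.max() - db.min() + 1e-12)
    return np.round(scaled * 255.0).astype(np.uint8)


# Usage: a 2.1 s, 1 kHz tone; 1000 Hz falls exactly on bin 1000 / (SR / N_FFT) = 32.
t = np.arange(int(2.1 * SR)) / SR
img = to_grayscale_image(np.sin(2 * np.pi * 1000 * t))
print(img.shape, img.dtype)            # (128, 257) uint8
print(int(img.mean(axis=0).argmax()))  # 32
```

Working in decibels and clipping the dynamic range keeps quiet background bins from compressing the contrast of the event itself when the image is scaled to 8 bits.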

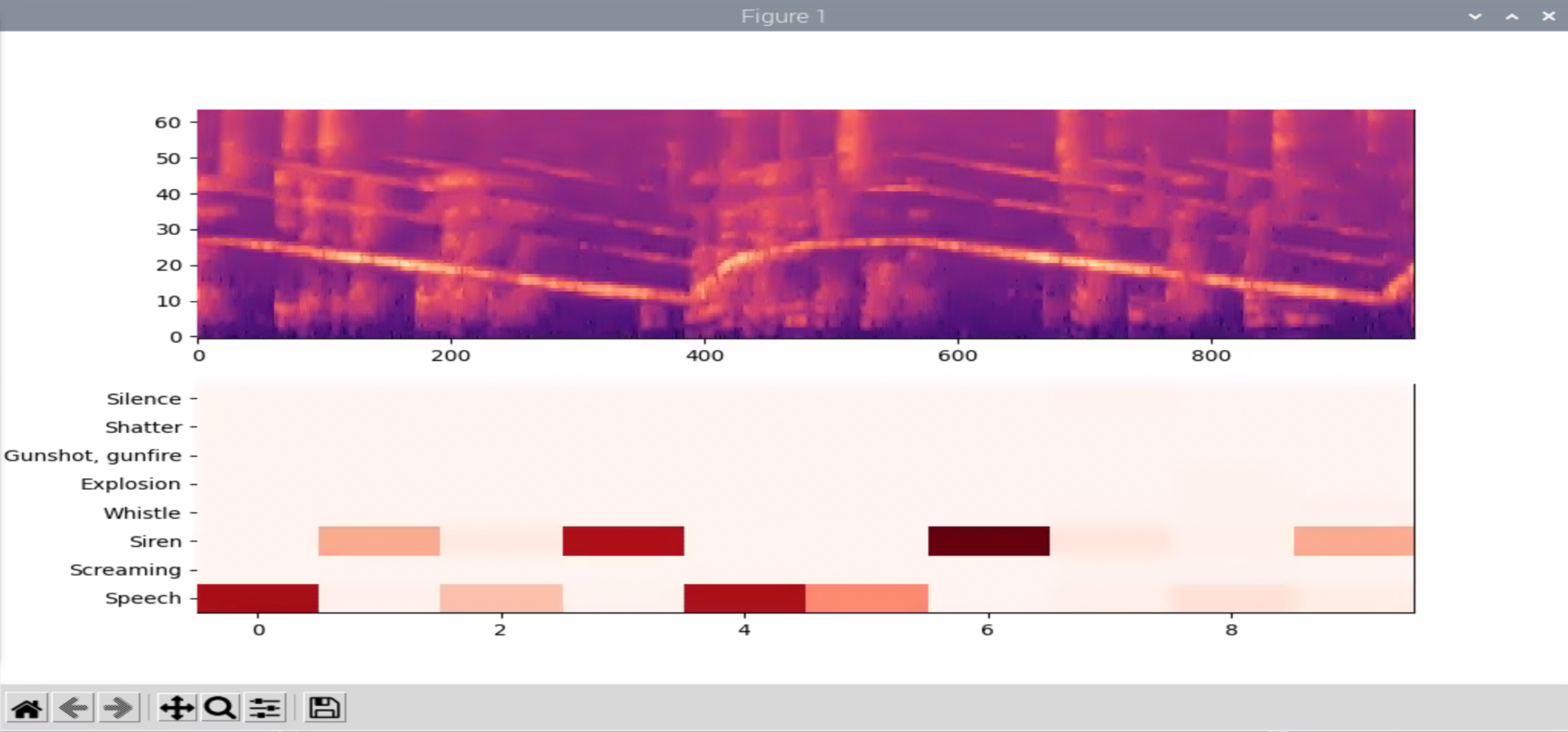
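The reported F1-score (0.5464) is lower than the harmonic mean of the reported precision and recall (about 0.565), which suggests the metrics were averaged per class; the averaging scheme is not stated, so the macro averaging below is an assumption. The sketch computes macro precision, recall and F1 from a confusion matrix with true classes in rows, using a made-up three-class matrix rather than the ADS results; `macro_scores` is a hypothetical helper.

```python
import numpy as np


def macro_scores(cm):
    """Macro precision, recall and F1; cm[i, j] counts true class i predicted as j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how often each class truly occurs
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    # Macro averaging: unweighted mean over classes.
    return precision.mean(), recall.mean(), f1.mean()


# Usage with a made-up 3-class confusion matrix (not ADS data).
cm = [[8, 2, 0],
      [1, 5, 4],
      [0, 3, 7]]
p, r, f = macro_scores(cm)
print(round(p, 4), round(r, 4), round(f, 4))  # 0.6751 0.6667 0.6696
print(round(2 * p * r / (p + r), 4))          # 0.6708, differs from the macro F1
```

Because macro F1 averages the per-class F1 values, it generally differs from the harmonic mean of macro precision and macro recall, as the last line shows.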

For overlapping event detection, a custom two-dimensional CNN based on the YAMNet model was employed. To enhance training, an additional class for overlapping events was introduced, with training examples generated by mixup augmentation at a 30% mixture. Mel-spectrogram energies with a shape of 96 × 64 were used as input features, and the algorithm produced classification results every second. The algorithm was integrated into the ADS and runs in real time. In addition, a visualisation tool was implemented to display the predicted labels; it uses a colour scheme in which a darker colour indicates a higher probability score for the class. A screenshot of the tool is presented in Figure 3.
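The mixup-style overlay used to create overlapping training examples might look like the sketch below. The source only states that a 30% mixture was used; reading it as overlaying the second clip at 30% weight, with the label vector taken as the union of both clips' labels, is an assumption, and the dedicated overlapping-event class described for the ADS is not modelled here. The function name `mix_overlap` and the three-class label layout are hypothetical.

```python
import numpy as np


def mix_overlap(primary, secondary, labels_primary, labels_secondary, ratio=0.3):
    """Overlay `secondary` on `primary` at `ratio` weight and union their labels.

    Assumption: '30% mixture' is read as ratio=0.3 for the secondary clip.
    """
    n = min(len(primary), len(secondary))  # trim to the shorter clip
    mixed = (1.0 - ratio) * np.asarray(primary[:n]) + ratio * np.asarray(secondary[:n])
    # Multi-hot target: both events are present in the mixture.
    target = np.maximum(np.asarray(labels_primary), np.asarray(labels_secondary))
    return mixed, target


# Usage with synthetic tones; hypothetical label layout [Screaming, Police siren, Gunshot].
sr = 16_000
t = np.arange(sr) / sr
scream = np.sin(2 * np.pi * 900 * t)
siren = np.sin(2 * np.pi * 700 * t)
mixed, target = mix_overlap(scream, siren, [1, 0, 0], [0, 1, 0])
print(mixed.shape, target)  # (16000,) [1 1 0]
```

Unlike classic mixup, which interpolates the labels with the same weights as the inputs, this variant keeps both labels at 1, since both events are audible in the mixture.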

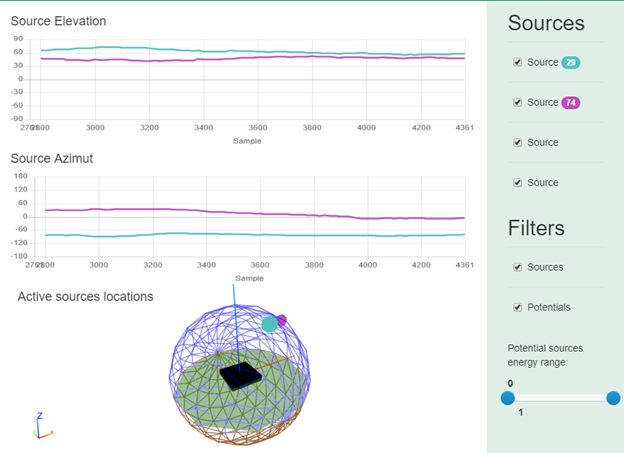

The ODAS framework, which is optimised to run on low-cost embedded hardware such as the Raspberry Pi, was used for sound source localisation. ODAS provides sound source localisation, tracking and sound source separation, along with a web interface for visualisation. For each microphone pair, the Generalized Cross-Correlation with Phase Transform (GCC-PHAT) is computed between the two signals; the lag at which the cross-correlation peaks gives the time delay between them, which is used to locate the sound sources in real time. The ODAS configuration file was edited to match the properties of the microphones used. The ODAS Studio web interface is illustrated in Figure 4.
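The per-pair time-delay estimate can be sketched with NumPy as below. This is a textbook GCC-PHAT, not ODAS code: the cross-power spectrum is normalised to unit magnitude so that only phase information remains, and the lag of the resulting correlation peak is the time difference of arrival. The far-field conversion to an angle, the 0.2 m microphone spacing, the 343 m/s speed of sound and the helper names are illustrative assumptions.

```python
import numpy as np


def gcc_phat(sig, ref, fs, max_tau=None):
    """Return the delay (s) of `sig` relative to `ref` and the GCC-PHAT curve."""
    n = len(sig) + len(ref)             # zero-pad to avoid circular wrap-around
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    cross /= np.abs(cross) + 1e-15      # PHAT weighting: keep phase only
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_tau is not None:             # restrict to physically possible lags
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so that lags run from -max_shift to +max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / fs, cc


def angle_from_tdoa(tau, mic_distance, c=343.0):
    """Far-field direction of arrival (degrees from broadside) for one mic pair."""
    return float(np.degrees(np.arcsin(np.clip(c * tau / mic_distance, -1.0, 1.0))))


# Usage: broadband noise reaching the second microphone 5 samples later.
fs, d = 16_000, 0.2                     # hypothetical sample rate and mic spacing
rng = np.random.default_rng(0)
ref = rng.standard_normal(4096)
sig = np.concatenate((np.zeros(5), ref[:-5]))
tau, _ = gcc_phat(sig, ref, fs, max_tau=d / 343.0)
angle = angle_from_tdoa(tau, d)
print(round(tau * fs))                  # 5
print(round(angle, 1))                  # 32.4
```

Limiting the search with `max_tau` to the largest physically possible delay (spacing divided by the speed of sound) discards spurious correlation peaks caused by reverberation or noise.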

A mid-term demonstration of the ADS was held on November 29, 2022 in Ankara. It showcased the system's operating principles and its ability to detect and localise single and overlapping sound events in a real-time test scenario in an indoor environment. To further validate the system, various sound event scenarios, both standalone and overlapping, were tested outdoors at the CERTH premises. In addition, a data capture is scheduled at the CERTH premises to evaluate and test the ADS capabilities. During the capture, the ADS will be mounted on a UAV to detect and localise sound events at various distances, simulating the conditions of the final demonstration.